Archive

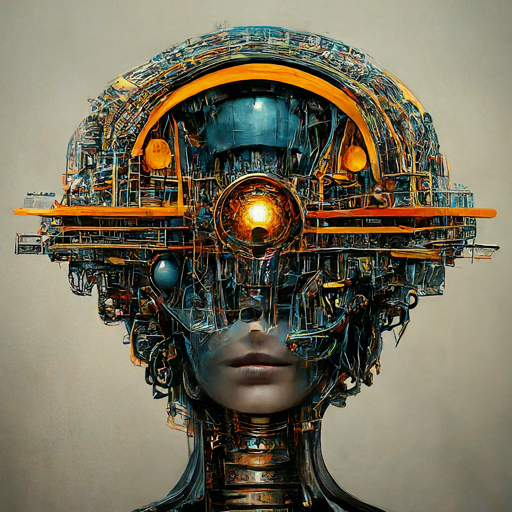

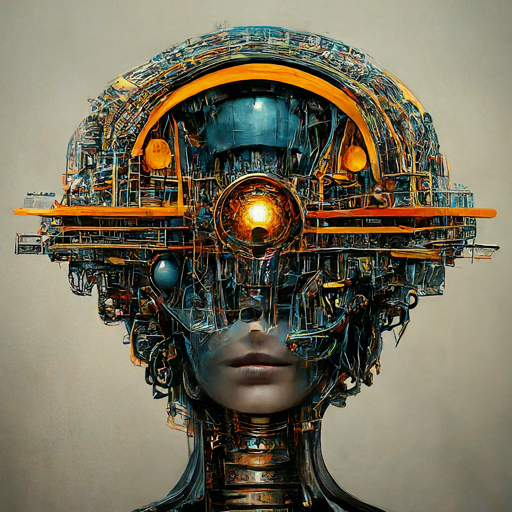

ChatGPT: The Future, Like It Or Not

A discussion of LLMs: their potential, limitations, and real-world applications in fields like medicine, law, and design, plus a personal story about AI’s diagnostic capabilities.
